Mike Ault's thoughts on various topics, Oracle related and not. Note: I reserve the right to delete comments that are not contributing to the overall theme of the BLOG or are insulting or demeaning to anyone. The posts on this blog are provided “as is” with no warranties and confer no rights. The opinions expressed on this site are mine and mine alone, and do not necessarily represent those of my employer.

Thursday, December 04, 2008

Happy Holiday Shopping - Not!

Sears and Others:

http://money.cnn.com/2008/11/28/technology/bc.apfn.tec.holidayshop.ap/index.htm

http://tasquatch-sentinelling.blogspot.com/2008/11/blog-post_7519.html

Dr. Pepper:

http://www.bizjournals.com/atlanta/stories/2008/12/01/daily45.html

In order to understand what is happening you must understand what occurs when a user attempts to access a website, let alone when they attempt a complex transaction. When a user logs in to a website the user identification must be validated from a database using several database queries. For example: Is the user ID valid? Is the password correct? Has the password expired? What type of user is this? etc. It is even worse if the user has to create a login and password as well as enter other data such as address or credit data. All of this transactional traffic causes a flurry of underlying IO subsystem activity and web traffic across the networks. And of course all of this is magnified when actual query and sales transactions are also being performed.

All of the traffic to the system database can overwhelm the underlying IO subsystem, especially when it is disk array based, which is the case with a majority of databases. A disk generally takes about 5 milliseconds to respond to an IO request. Now, with on-disk caching and large caches in the disk arrays this response time can sometimes be reduced to 1 millisecond, but as the caches flood with high activity, performance generally drops to 5 milliseconds per IO or more. As the time to respond increases, the number of users which can be served drops, in a direct example of Little’s Law.
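To make the Little's Law point concrete, here is a minimal sketch in Python. Little's Law says the average number of requests in a system equals the arrival rate times the average time each request spends in it, so with a fixed number of requests in flight the sustainable rate falls as response time rises. The function name and the figure of 64 in-flight requests are assumptions for illustration only; the 1 ms and 5 ms latencies are the ones quoted above.

```python
def sustainable_throughput(in_flight: float, response_time_s: float) -> float:
    """Little's Law (L = lambda * W) solved for lambda, the requests served per second."""
    if response_time_s <= 0:
        raise ValueError("response time must be positive")
    return in_flight / response_time_s


# Assumption for illustration: the storage keeps 64 requests in flight at once.
IN_FLIGHT = 64

for label, latency_s in [("cache hit, 1 ms", 0.001),
                         ("disk, 5 ms", 0.005),
                         ("flooded, 10 ms", 0.010)]:
    rate = sustainable_throughput(IN_FLIGHT, latency_s)
    print(f"{label:>16}: {rate:,.0f} requests/sec")
```

Going from 1 ms to 5 ms cuts the sustainable request rate by a factor of five without any change in the workload itself.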

Unfortunately, while you can increase the IOPS (input/output operations per second) by increasing the number of disks in an array, you cannot decrease the latency below that of any one disk. In fact, for a large read you will suffer from the convoy effect, where the slowest disk involved in the IO operation drives down the performance of the whole operation.
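A rough way to picture this, as a simplified model rather than a description of any particular array: aggregate IOPS behaves like a sum over the disks, while the latency of a striped read behaves like a maximum over the disks it touches. The per-disk figures below (200 IOPS each, one laggard at 9.5 ms) are hypothetical.

```python
from typing import Sequence


def array_iops(per_disk_iops: Sequence[float]) -> float:
    """Independent small IOs spread across the disks, so their rates add up."""
    return sum(per_disk_iops)


def striped_read_latency_ms(per_disk_latency_ms: Sequence[float]) -> float:
    """A striped read finishes only when its slowest member disk has answered."""
    return max(per_disk_latency_ms)


# Hypothetical array: every disk delivers 200 IOPS; one disk is slow to respond.
four_disk_latency = [5.0, 5.2, 4.8, 9.5]
eight_disk_latency = four_disk_latency + [5.1, 4.9, 5.0, 5.3]

print("4 disks:", array_iops([200.0] * 4), "IOPS,",
      striped_read_latency_ms(four_disk_latency), "ms per striped read")
print("8 disks:", array_iops([200.0] * 8), "IOPS,",
      striped_read_latency_ms(eight_disk_latency), "ms per striped read")
```

Doubling the disk count doubles the IOPS in this model, but the striped read still waits on the 9.5 ms disk.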

Several websites have found that by placing the user tables and other transaction dependent tables on low latency storage such as solid state SAN replacements like the RamSan 400 or 500 series from Texas Memory Systems they can dramatically increase the capability to support many more transactions (read clients) than before. An example of the dramatic improvements that can be achieved is shown in the recent press release from The Container Store group:

http://www.superssd.com/pressrelease/2008-12-02.htm

Realizing that their underlying IO subsystem couldn’t support the expected peak load generated from a positive blurb about the company on The Oprah Winfrey Show, the folks at The Container Store turned to TMS RamSan technology.

Another area that must support high concurrent logins and maintain strict inventories is in the online gaming community. Eve Online was able to go from 15,000 online users to over 17,000 with a 40x performance improvement:

http://www.superssd.com/success/ccpgames.htm

As a final example, IC Source first tried doubling the number of disks in their infrastructure. The net result? Zero improvement. They finally solved the problem with SSD (RamSan) technology:

http://www.superssd.com/success/icsource.htm

What all of these examples show is that many times your problem cannot be solved by throwing more disks at it; you must get to the ultimate problem, latency, to fix the issue.

With a latency of 15 microseconds (0.015 milliseconds) and the resulting ability to support 600,000 IOPS and 4.5 GB/sec, the RamSan-440 is the heavy hitter in the TMS line as far as throughput goes. However, it is DDR RAM based and currently limited to 0.5 terabytes of storage capacity per unit. Compared to a normal disk latency of 5 milliseconds, the RamSan-440 shows a factor of 333 decrease in latency. Even if you get 1.0 millisecond latency from disk through short-stroking and aggressive caching, the 440 is still a factor of 67 faster.
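The two latency factors are simple ratios of the figures quoted above. A short Python check, where the constant and function names are mine and only the latencies come from the text:

```python
DISK_LATENCY_MS = 5.0           # typical disk response time quoted above
CACHED_DISK_LATENCY_MS = 1.0    # best case with short-stroking and caching
RAMSAN_440_LATENCY_MS = 0.015   # 15 microseconds


def latency_factor(slow_ms: float, fast_ms: float) -> float:
    """How many times lower the fast device's latency is than the slow one's."""
    return slow_ms / fast_ms


print(round(latency_factor(DISK_LATENCY_MS, RAMSAN_440_LATENCY_MS)))         # 333
print(round(latency_factor(CACHED_DISK_LATENCY_MS, RAMSAN_440_LATENCY_MS)))  # 67
```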

http://www.superssd.com/products/RamSan-440/

The RamSan-500 series, utilizing Flash memory technology, tops out at 2 terabytes of storage capacity per unit (with promises to go to 8 terabytes in the near future) and has a 200 microsecond (0.2 millisecond) peak latency with a minimal IOPS rating of 100,000 IOPS. Just to put this in perspective, EMC recently achieved 100,000 IOPS using 2-CX30 racks and 391 disk drives in a RAID0 configuration; the RamSan-500 does it with a single 4U unit, and the RamSan-440 beats it by a factor of 6 in a footprint similar to that of the RamSan-500.
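For a sense of scale, the EMC figure works out to only a few hundred IOPS per drive. A small sketch using just the numbers quoted in this post (the names are mine):

```python
EMC_IOPS = 100_000          # EMC result quoted above
EMC_DRIVES = 391            # drives used to reach it
RAMSAN_440_IOPS = 600_000   # RamSan-440 rating quoted earlier in this post


def iops_per_drive(total_iops: float, drives: int) -> float:
    """Average IOPS each drive contributes to the array total."""
    if drives <= 0:
        raise ValueError("drive count must be positive")
    return total_iops / drives


print(f"IOPS per disk drive: {iops_per_drive(EMC_IOPS, EMC_DRIVES):.0f}")
print(f"RamSan-440 vs 391-drive array: {RAMSAN_440_IOPS / EMC_IOPS:.0f}x")
```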

http://www.superssd.com/products/RamSan-500/

Being solid state (except for the cooling fans) means the RamSan technology is inherently more reliable and less prone to crashes. Current estimates of MTBF show a value of at least 500,000 hours per RamSan before a critical failure. With built in RAID, write leveling for Flash and the use of ECC memory as well as ChipKill the RamSans have built in redundancy. Utilizing Flash drives for backup, the 440 also provides unparalleled data persistence with triple battery backup ensuring that all data is written to Flash before shutdown. The RamSan-500 also uses battery backup to ensure that the 64 gigabytes of DDR cache is written to Flash on shutdown.

Another big movement is the green technology push we see today. I’ll leave the math on figuring the electrical and cooling costs for disk arrays to you, but at under 300 watts for the RamSan-500 and 600 watts for the RamSan-440 it is easy to see the cost savings from the energy footprint reduction.

So what should you take away from all of this? Essentially, if you have reached the latency limit of your IO subsystem, increasing the number of disks will not help. The only way to improve the performance of the system is to reduce overall latency. If you are an online retailer looking at the coming holiday season with dread because of performance issues, look at using SSD technology such as the TMS RamSan 400/500 series to slay the latency monster and achieve stellar website performance.

Saturday, November 08, 2008

From Mike at 30,000 feet

I have also been busy creating a demonstration database to show a side-by-side comparison of the differences in performance between disk and RamSan; after all, seeing is believing, as the old saw goes. I am writing this entry while flying over the mountains of Virginia and North Carolina on my way home from giving a one day tuning seminar in Sterling, Virginia at Oracle's offices there under the auspices of the NatCap Oracle group. I leave again next week to give the same seminar to the Dallas Oracle User Group at the Oracle office in Plano, Texas, and from there go on to the Super Computing conference in Austin and then finally home again to Alpharetta for the Thanksgiving holiday.

I am also compiling a set of statistics to show the IOPS/gigabyte needed by Oracle databases of various sizes. For this task I will be gathering what historical data I have from past clients, and if any of you have that type of data for your systems I would love to have it to add to the mix. If I have time I will try to come up with a query that shows this for a system and will post it. I imagine it will involve a rather simple sum of gigabytes (maybe just the active ones from dba_segments) and a capture of read and write IO counts from the file statistics views. Here is a simple first cut; no doubt someone can make it simpler:

set serveroutput on

SET FEEDBACK OFF

col tod new_value now noprint

select to_char(sysdate, 'ddmonyyyyhh24mi') tod from dual;

TTITLE 'IOs Per Second'

col null noprint

select null from dual;

spool io_sec&&now

declare

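cursor get_io is select

-- scalar subqueries keep the two views from being cross joined, which would inflate both sums

nvl((select sum(phyrds+phywrts) from sys.gv_$filestat),0) sum_io1,

nvl((select sum(phyrds+phywrts) from sys.gv_$tempstat),0) sum_io2

from dual;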

cursor get_gig is select

sum(bytes)/(1024*1024*1024) from dba_segments;

gig number;

now date;

elapsed_seconds number;

sum_io1 number;

sum_io2 number;

sum_io12 number;

sum_io22 number;

tot_io number;

tot_io_per_sec number;

fixed_io_per_sec number;

temp_io_per_sec number;

iopsgig number;

begin

open get_io;

fetch get_io into sum_io1, sum_io2;

open get_gig;

fetch get_gig into gig;

close get_io;

close get_gig;

select sum_io1+sum_io2 into tot_io from dual;

select sysdate into now from dual;

select ceil((now-max(startup_time))*(60*60*24)) into elapsed_seconds from gv$instance;

fixed_io_per_sec:=sum_io1/elapsed_seconds;

temp_io_per_sec:=sum_io2/elapsed_seconds;

tot_io_per_sec:=tot_io/elapsed_seconds;

iopsgig:=tot_io_per_sec/gig;

dbms_output.put_line('Elapsed Sec :'||to_char(elapsed_seconds, '9,999,999.99'));

dbms_output.put_line('Fixed IO/SEC :'||to_char(fixed_io_per_sec,'9,999,999.99'));

dbms_output.put_line('Temp IO/SEC :'||to_char(temp_io_per_sec, '9,999,999.99'));

dbms_output.put_line('Total IO/SEC :'||to_char(tot_io_per_sec, '9,999,999.99'));

dbms_output.put_line('Total Used Gig:'||to_char(gig, '9,999,999.99'));

dbms_output.put_line('Total IOPS/Gig:'||to_char(iopsgig, '9,999,999.99'));

end;

/

spool off

ttitle off

set feedback on

Here is what the output should look like, and as you can see it will generate a report as well:

Fri Nov 07 page 1

IOs Per Second

Elapsed Sec : 132,900.00

Fixed IO/SEC : 3.93

Temp IO/SEC : .01

Total IO/SEC : 3.94

Total Used Gig: 1.34

Total IOPS/Gig: 2.94
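The arithmetic behind the report is easy to check outside the database. Here is a minimal Python sketch of the same calculation. The function name is mine, and the raw IO counts are back-calculated from the rounded rates in the report above, so treat them as assumed values rather than the actual counter readings.

```python
def iops_per_gig(datafile_io: int, tempfile_io: int,
                 elapsed_seconds: float, used_gig: float) -> float:
    """Average IOs per second since startup, divided by the gigabytes in use."""
    if elapsed_seconds <= 0 or used_gig <= 0:
        raise ValueError("elapsed seconds and used gigabytes must be positive")
    total_io_per_sec = (datafile_io + tempfile_io) / elapsed_seconds
    return total_io_per_sec / used_gig


# Assumed counts, chosen to reproduce 3.93 and 0.01 IO/sec over 132,900 seconds.
DATAFILE_IO = 522_297
TEMPFILE_IO = 1_329

print(f"Total IOPS/Gig: {iops_per_gig(DATAFILE_IO, TEMPFILE_IO, 132_900, 1.34):.2f}")
```

Because the counters are cumulative since instance startup, the result is an average over the whole uptime, not a peak rate.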

Well, I will sign off for now, hope to see some of you at the Dallas Bootcamp seminar and more at the Supercomputing conference.

Monday, October 06, 2008

Two Tales

Tale 1:

In 1979 or so a man who called himself R. C. Christian (not his real name) came to the office of the Pyramid Quarry in Elberton, Georgia and, as the representative of several private individuals, contracted the company to create a large granite monument (the town of Elberton is the self-proclaimed Granite Capital of the World). The design and location of the monument were provided, and in March of 1980 it was erected by the Elberton Granite Finishing Company at a cost of approximately $41 million. Known as The Georgia Guidestones, it sits outside of Elberton on one of the tallest hills in Elbert County, Georgia.

The Georgia Guidestones

The monument consists of four standing stones with a central Gnomon stone and a capstone. The faces of the standing stones are all inscribed with the same set of guidelines, each in a different “modern” language:

Maintain humanity under 500,000,000 in perpetual balance with nature

Guide reproduction wisely — improving fitness and diversity

Unite humanity with a living new language

Rule passion — faith — tradition and all things with tempered reason

Protect people and nations with fair laws and just courts

Let all nations rule internally resolving external disputes in a world court

Avoid petty laws and useless officials

Balance personal rights with social duties

Prize truth — beauty — love —seeking harmony with the infinite

Be not a cancer on the earth — leave room for nature — leave room for nature

Note that the last guideline has “leave room for nature” repeated twice on each of the four monoliths’ eight total sides, with the exception of the one in Russian, where it looks like they ran out of space. The monoliths have the message in the following languages, moving clockwise around the structure from the north: English, Spanish, Swahili, Hindi, Hebrew, Arabic, ancient Chinese, and Russian.

On the capstone is the message, in Babylonian Cuneiform (north), Classical Greek (east), Sanskrit (south), and Egyptian Hieroglyphs (west), and the stone embedded in the earth on the western exposure provides what is supposed to be an English translation: "Let these be guidestones to an age of reason." It is rumored to be engraved on the top of the capstone as well, but as I am not 20 years old anymore I didn't try to get up there and see!

The guidestones messages and capstone message give a tip of the hat to Thomas Paine and other “radical” authors who espoused these types of rules for life in ages past.

The monument itself is aligned on several astronomical axes. It has a slit for viewing the sunset, a hole to look at the North Star (if you move to the left and squint, as the monument is supposedly two degrees off proper placement) and a hole in the capstone that marks noon throughout the year. The width of the monument supposedly aligns with the migration of the moon throughout the year.

The setting sun through the slit

The sight of the setting sun through the aperture provided is quite fetching. Like I said, the North Star (“Celestial North”) is also visible through a hole in the center slab, if you move to the left and look carefully through the hole, due to a misalignment of the monument. I wasn’t there for noon so I couldn’t verify the noontime effect, but I am assured it works well.

Now, tale 2.

As I was wandering around the site video taping and taking pictures an older gentleman and his wife drove up, he got out and his wife waited in the truck. Tall (about 6 foot) and a little heavy although not overly so, he reminded me of several rural Georgia farmers I have met in my wanderings. He told me that he had heard someone had vandalized the stones and wanted to see how badly (you can see what appears, upon close inspection, to be some type of clear polymer resin splashed on two of the stones.) We struck up a conversation and I asked him about the mystery surrounding who had them erected. He asked if I had heard the story and I said I read it on the web. He then stated:

“All of that is bullshit,” and smiled at me. “I worked at the quarry when this was built. Mr. Fendley himself had it built and made up that story.” Mr. Fendley is/was the owner of the Pyramid Quarry. “He liked publicity and Lord knows this gave it to him.”

He also said he confronted Mr. Fendley about the monument and “He didn’t deny it, he looked mad, but kind of half smiled at me.”

Seems Joe F. Fendley Sr., Wayne Mullinex (the man who donated the land) and Wyatt C. Martin, President of the Granite City Bank, which was involved with the financing, were all Shriners (and therefore Masons) and agreed to do this together.

Now even if you take out having to pay for the granite itself (119 tons, give or take) and the cost of the land, the cost of paying the workers to extract, cut, shape and carve the custom sized stones as well as place them is still rather high just for a publicity stunt. However, I wouldn’t put it past some folks. Also, the alignments and other parts of the monument are intriguing. Why go to such effort when just the slabs themselves and their message would have been enough?

To add to the message there is supposedly a time capsule buried (or will be buried) under the slab that is on the west side of the monument. The slab explains the monument’s message, who supposedly built it and details of the stones themselves. The “six feet under” makes me wonder if it isn’t someone who will be buried, hence the missing dates.

Inscription about the time capsule, notice missing dates.

All in all the stones looked like they were rather hurriedly inscribed: some of the inscriptions overrun the finished part of the slabs, there are translation errors and character errors on some messages, and the Russian stone lacks the repeat due to running out of space. On the stone showing noon for several different parts of the year, the final marker overruns the finished part of the stone. Was this a mistake, or is that marker more (or less) significant than the others? Also, the North Star viewing hole appears to have been done as two holes drilled to run into each other; unfortunately they are off axis to each other, giving the hole a curved appearance, and the noon hole looks like it was added as an afterthought.

Some have commented that if you wanted people to see the stones, why not place them in a more significant location than a rural Georgia county? Well, for one thing, the sunset view and the North Star views probably would be difficult to guarantee in the heart of Atlanta or in areas where development might throw a skyscraper in the way. Besides, if this really is a message for post-Armageddon survivors you don’t want it near any ground-zero targets.

Final “noon” marker overruns finished stone (circled in red)

Now, do the “mistakes” add up to rushed workers or are they deliberate? Are they sending us a message? I’ll leave that to the “Da Vinci Code” fans to determine.

So, which tale is the true one? I know I have my opinion, although the romantic side of me leans towards the mysterious group of strangers. For those close enough, go see this “America’s Stonehenge” yourself; for those who can’t, look it up on the net. I’ll be posting a bunch of other photos from my visit at my http://www.scubamage.com/ site as soon as I finish processing them; I’ll provide a more substantial link at that time.

Monday, September 29, 2008

Oracle's Data(Warehouse)base Machine

The biggest news at the conference was Larry Ellison’s announcement of the Exadata storage concept and the Oracle Database Machine, both developed jointly with HP. A full HP Oracle Database Machine offers up to 168 terabytes of raw storage with 368 gigabytes of caching and 64 main CPUs in 8 stacked DL 360 G5 servers. Each Exadata unit is an HP ProLiant DL 180 G5 with dual quad-core CPUs, 8 gigabytes of memory and 12 drives, either 300 GB SAS or 1 terabyte SATA. The entire HP Oracle Database Machine contains 14 Exadata blocks and 8 dual quad-core servers in a full configuration. The Exadata blocks can be purchased separately. There are four 24-port InfiniBand switches provided in the Database Machine. The entire device provides a throughput of 10.5 (SATA) to 14 (SAS) GB/second.

Now, each Exadata block can only provide 1 terabyte of usable space if the 300 GB drives are utilized and 3.3 terabytes if the 1 terabyte drives are used, unless Oracle compression is also used. This space calculation (from Oracle documentation) is based on mirroring of all the drives and subtracting space for logs, undo and temp space. The usual “your mileage may vary” warning applies to this available space. ASM with what appears to be high redundancy storage is being used to manage the drives. So while raw storage per block appears to be 3.6 TB (12 x 300 GB) to 12 TB (12 x 1 TB), the actual space that ends up being usable is less than 1/3 of those amounts. Each Exadata has two 20-gigabit InfiniBand interfaces. However, the blocks can only support 1 GB per second of output with the SAS configuration and 750 MB per second in the SATA configuration.
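The per-block figures roll up to the full-machine numbers in a straightforward way. The Python sketch below only reproduces arithmetic from figures quoted in this post (12 drives per block, 14 blocks, usable space and output rate per block). The function and key names are mine, and it assumes decimal units (1 TB = 1,000 GB).

```python
BLOCKS_PER_MACHINE = 14
DRIVES_PER_BLOCK = 12


def machine_totals(drive_gb: int, usable_tb_per_block: float,
                   gb_per_sec_per_block: float) -> dict:
    """Scale one Exadata block's figures up to a full Database Machine."""
    raw_tb_per_block = DRIVES_PER_BLOCK * drive_gb / 1000  # decimal terabytes
    return {
        "raw_tb_per_block": raw_tb_per_block,
        "raw_tb_total": raw_tb_per_block * BLOCKS_PER_MACHINE,
        "usable_tb_total": usable_tb_per_block * BLOCKS_PER_MACHINE,
        "usable_fraction": usable_tb_per_block / raw_tb_per_block,
        "gb_per_sec_total": gb_per_sec_per_block * BLOCKS_PER_MACHINE,
    }


sas = machine_totals(drive_gb=300, usable_tb_per_block=1.0, gb_per_sec_per_block=1.0)
sata = machine_totals(drive_gb=1000, usable_tb_per_block=3.3, gb_per_sec_per_block=0.75)

for name, totals in (("SAS", sas), ("SATA", sata)):
    print(f"{name}: {totals['raw_tb_total']:.1f} TB raw, "
          f"{totals['usable_tb_total']:.1f} TB usable "
          f"({totals['usable_fraction']:.0%} of raw), "
          f"{totals['gb_per_sec_total']:.1f} GB/sec")
```

The SATA case gives the 168 TB raw and 10.5 GB/second quoted for the full machine, and the SAS case gives 14 GB/second.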

The Oracle Database Machine was actually designed for large data warehouses but Larry assured us we could use it for OLTP applications as well. Performance improvements of 10X to 50X if you move your application to the Database Machine are promised. This dramatic improvement over existing data warehouse systems is provided through placing an Oracle provided parallel processing engine on each Exadata building block so instead of passing data blocks, results are returned. How the latency of the drives is being defeated wasn’t fully explained.

The HP Oracle Database Machine must run Oracle11g (11.1.0.7), RAC and Linux, and each Exadata block must have the new Oracle 11g parallel query engine installed. So in a full configuration you are on the hook for a 64 CPU Oracle and RAC license and 112 Oracle parallel query licenses (assuming it is per CPU; if it is per Exadata block then it will be 14) as well as any Grid Control licenses you may need. The base cost of the full Database Machine is around $650K, which seems quite a bargain for 14-46 terabytes of usable storage and a 64 processor stack. However, you will also need over a million dollars in licenses even with aggressive reductions from your sales representative.

The HP-Oracle Database Machine only works with Oracle databases (just thought I should throw that in.)

Whew! The HP-Oracle Database Machine offers quite an impressive array of facts, figures, promises and price tags. It will be interesting to see how this all sorts out over the coming months. Will there be enough profit in the HP-Oracle Database Machine to keep HP interested? Or is this another network computer? For those too young to remember, Larry’s last hardware foray was the Network Computer, a device that would replace all the desktops and centralize application and data storage. It failed. [Note: don’t forget about Pillar Data, another Ellison investment that would seem to be hurt by this announcement.] I believe this system is designed to help Oracle protect turf from Netezza and promote growth in the analytics market. Targeting the product to OLTP environments is just sloppy marketing, as the system will not offer the latency needed in real OLTP transaction intensive shops. These applications do not need parallel queries; they need low latency database writes and reads.

So for nearly 2 million dollars (licenses plus hardware) you get a dedicated Oracle server in a rack with 64 CPUs of central processing and 46 terabytes of usable storage managed by 112 block resident CPUs and a wee bit less than 224 gigabytes of cache area (168 gigabytes of cache were promised after processing overhead was subtracted). However, you must throw away your existing infrastructure, upgrade to Oracle11g and marry your future to the HP Oracle Database Machine to do so.

What might be an alternative? Well, how about keeping your existing hardware, keeping your existing licenses, and just purchasing solid state disks to supplement your existing technology stack? For that same amount of money you will shortly be able to get the same usable capacity of Texas Memory Systems RamSan devices. By my estimates that will give you 600,000 IOPS, 9 GB/sec bandwidth (using Fibre Channel, more with InfiniBand), 48 terabytes of non-volatile flash storage [S1], 384 GB of DDR cache and a speed up of 10-50X depending on the query (based on tests against the TPCH data set using disks and the equivalent RamSan SSD configuration). More importantly, this performance can be delivered with sub-millisecond response time. At Oracle World I was presenting on the importance of latency to Oracle databases. The Exadata is massive and offers great bandwidth but will have nearly awful disk access latencies due to the massive and slow disk drives included in the system.

Of course, if you don’t need 48 terabytes you can purchase RamSan SSD technology from 32 gigabytes up to whatever you actually need. RamSan SSD technology works with all Oracle versions and requires no special licensing or changes to your system. RamSan uses Fibre Channel or InfiniBand for connection to your infrastructure and looks identical to a disk drive once configured (it takes about 10 minutes).

Oh, and the RamSan doesn’t care if you are on Oracle, SQL Server, MySQL, or cousin Joe’s Ozark Mountain special database.

So let’s recap:

Purchase the complex, tied to Oracle with a golden chain, HP-Oracle Database Machine and end up throwing away your existing technology stack, and spend up to 2 million dollars for the privilege, for a speed up of 10x to 50x on data warehouse type queries.

Or

Purchase just as much RamSan SSD technology as you need ($43K base price for 32 GB mirrored), keep your existing hardware and license structure (or possibly reduce it) and get a 10x to 50x speed up on data warehouse type queries, with the freedom to change databases as you need to.

Call me simple, but I think I see the proper choice.

[S1]Note that the 8TB systems are projected to have 1.5GB/second of bandwidth sustained reads or writes (to Flash). This can be accomplished with four 4Gbit FC ports or two 4x IB ports per unit.

Tuesday, September 16, 2008

Is there a DBA in the House?

All of the problem solving that occurs on House reminds me of troubleshooting in the Oracle world. In many cases there are a number of symptoms with database problems and some of them are contradictory, just as with problems in the human health areas. Oh, and everybody lies. “No, there weren’t any changes”, “No, nothing is different between these two test runs”, “Yes, we used the same data/transactions/parameters”.

In almost every episode of House they go through at least three different “cures” before they find the real problem and solution. Many times in the Oracle universe we apply a fix for a problem, only to find it wasn’t really the issue, or, in fixing it we transfer the problem to another area of the database. Another similarity is that many times House and his team will take a shotgun approach when there isn’t a clear solution, applying two or more “cures” at the same time, much like a DBA will apply multiple fixes in a single pass, thus not really knowing what was fixed but just breathing a sigh of relief when performance improves.

I think the character portrayed as Dr. House would make a great DBA, but somehow I can’t see folks glued to their TV screens hoping that next index will fix the query…

Thursday, September 04, 2008

SSD is Green Technology

But just how much can be saved? In comparisons to state of the art disk based systems (sorry, I can’t mention the company we compared to) at 25K IOPS, 50K IOPS and 100K IOPS with redundancy, SSD based technology saved from a low of $27K per year at 6 terabytes of storage and 25K IOPS to a high of $120K per year at 100K IOPS and 2 terabytes of storage using basic electrical and cooling estimation methods. Using methods documented in an APC whitepaper the cost savings varied from $24K/yr to $72K/yr for the same range. The electrical cost utilized was 9.67 cents per kilowatt hour (average commercial rate across the USA for the last 12 months) and cooling costs were calculated at twice the electrical costs based on data from standard HVAC cost sources. It was also assumed that the disks were in their own enclosures separate from the servers while the SSD could be placed into the same racks as the servers. For rack space calculations it was assumed 34U of a 42U rack was available for the SSD and its required support equipment leaving 8U for the servers/blades.
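The basic estimation method is easy to reproduce. The sketch below uses the 9.67 cents per kilowatt hour rate and the cooling-at-twice-electrical rule from this post. The 300 W and 600 W inputs are the RamSan-500 and RamSan-440 power figures quoted in the December post on this blog, and running 24 hours a day all year is my assumption. The function name is mine, and this is not the APC whitepaper method.

```python
RATE_PER_KWH = 0.0967       # average commercial rate quoted above, in dollars
COOLING_MULTIPLIER = 2.0    # cooling costed at twice the electrical cost
HOURS_PER_YEAR = 24 * 365   # assumption: the device runs around the clock


def annual_power_and_cooling_cost(watts: float,
                                  rate_per_kwh: float = RATE_PER_KWH,
                                  cooling_multiplier: float = COOLING_MULTIPLIER) -> float:
    """Yearly electrical cost plus cooling cost for a device drawing `watts`."""
    kwh_per_year = watts / 1000 * HOURS_PER_YEAR
    electrical_cost = kwh_per_year * rate_per_kwh
    return electrical_cost * (1 + cooling_multiplier)


for name, watts in (("RamSan-500", 300), ("RamSan-440", 600)):
    print(f"{name}: ${annual_power_and_cooling_cost(watts):,.2f} per year")
```

Running the same function with the measured draw of a disk array gives the other side of the comparison.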

Even figuring in the initial cost difference, the SSD technology paid for itself before the first year was over in all IOPS and terabyte ranges calculated. In fact, based on values utilized at the Storage Performance Council website and the tpc.org website for a typically configured SAN from the manufacturer used in the study, even the cost for the SSD was less for most configurations in the 25K-100K IOPS range.

Obviously, from a green technology standpoint SSD technology (specifically the RamSan 500) provides directly measurable benefits. When the benefits from direct electrical, space and cooling cost savings are combined with the greater performance benefits the decision to purchase SSD technology should be a no brainer.

Sunday, August 24, 2008

A Tale of Two Databases

In my own laboratory (no, I don’t have an Igor, but he would come in handy to help dispose of dead computers now and then) I am running a two-node RAC cluster with each cluster node being a single 3 gigahertz 32 bit CPU with hyperthreading and 3 gigabytes of memory. The cluster interconnect is a single gigE Ethernet. As to storage, I utilize two JBOD arrays, a NexStor 18F and a NexStor 8F, each fully loaded with 72 gigabyte 10K Seagate SCSI drives and connected to the servers via a Brocade 2250 with dual QLA2200 1 Gb HBAs on each server. The arrays themselves are connected through single 1 Gb fibre channel connections. Oh, it is also running RedHat 4.0 Linux.

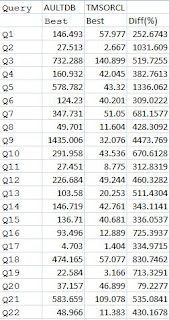

On the system in the remote lab, let’s call it TMSORCL for ease of identification, I have four 3.6 GHz CPUs, four 2-port QLA2642 4 Gb HBAs (I used 1) and 8 gigabytes of memory. I have Oracle10g 10.1.0.2 installed there; on the home lab (let’s call it AULTDB) I have Oracle11g 11.1.0.2 installed.

AULTDB is utilizing ASM on two diskgroups. One diskgroup consists of 16 drives, 8 on the 18F and 8 on the 8F, using normal redundancy and failgroups; the other diskgroup uses 8 drives on the 8F and uses external redundancy. I have placed the application data files and index files on the diskgroup with the 16 drives and the rest (system, temp, user, undotbs, redo logs) on the 8 disk externally redundant diskgroup. Using ORION (Oracle’s disk IO simulator) I was able to achieve nearly 6000 IOPS using 24 drives, so I hope that I can get near that using this configuration.

TMSORCL is only using the RAMSAN 400 through its 4 gb fibre channel connection for all database files. The RAMSAN should be able to produce enough IOPS to provide at least 100,000 through the single HBA (if it doesn’t saturate that is.)

Using Benchmark Factory from Quest Software I created two identical databases (you guessed it, TMSORCL and AULTDB.) These are TPCH type environments with a scale factor of 18, the largest I could support and still have enough temporary space to build indexes and run queries.

Now, some may be saying that I have set up an apples to oranges environment for comparison, and they would be correct. However, many folks will be facing just such a choice very soon, that is, to stick with an Oracle10g environment or upgrade to an 11g environment. Another question that many folks have is: should I go to RAC? So this test is not as useless as you may have first thought.

I set up both TMSORCL and AULTDB to be as near as possible in effective size (memory settings wise). Since AULTDB was spread across two 3 gigabyte memory areas, allowing for a total memory footprint of about 3-4 GB, I set up the TMSORCL environment to have a maximum size of 4 GB with a target of 3 GB for memory.

I had some interesting problems with the number 5 query in the TPCH query set until I added a few additional indexes; it kept blowing out the temporary tablespace even though I had it as large as 50 gigabytes. If you want the script for the additional indexes, email me. I also had issues with parallel query slaves not releasing their temporary areas. Anyway, after adding the indexes and incorporating periodic database restarts into the test régime I was able to complete multiple full TPCH power runs on each machine (just the single user stream 0 power run for now).

So, before we get to the results, let’s recap:

AULTDB – 32 bit RedHat 4 Linux RAC environment with two single 3 GHz CPUs running hyperthreaded to simulate 4 CPUs total, with 6 gigabytes of memory, utilizing four 1 Gb QLA2200 HBAs to access 24 10K 72 gigabyte drives using ASM. Effective SGA 4 gigabytes.

TMSORCL – 64 bit RedHat 4.0 Linux single server with four 3.6 GHz CPUs and 8 gigabytes of memory, utilizing one 4 Gb HBA port to access a single 128 GB RAMSAN 400. Effective SGA constrained to 4 gigabytes.

I ran the TPCH on each database (after getting stable runs) a total of 4 times. I will use the best run, as measured by total time to complete the 22 queries, for each database for the comparison runs. For AULTDB the best time to complete the run was 1:37:55 (One hour, thirty seven minutes and fifty-five seconds.) For TMSORCL the best time was 0:15:15 (zero hours, fifteen minutes and 15 seconds.) So on just raw, total time elapsed for identical data volumes, identical data contents and identical queries the TMSORCL database completed the runs 6.42 times faster (642%). The actual query timings in seconds are shown in the following chart. Based on summing the given query times the performance improvement factor from AULTDB to TMSORCL is 6.68 or 668% faster.
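The 6.42 figure is just the ratio of the two best elapsed times. A small Python check, where the helper names are mine and the times are the ones reported above:

```python
def to_seconds(hms: str) -> int:
    """Convert an 'H:MM:SS' elapsed time string to whole seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def speedup(slow_hms: str, fast_hms: str) -> float:
    """How many times longer the slow run took than the fast run."""
    return to_seconds(slow_hms) / to_seconds(fast_hms)


AULTDB_BEST = "1:37:55"    # 5,875 seconds
TMSORCL_BEST = "0:15:15"   # 915 seconds

print(f"TMSORCL finished {speedup(AULTDB_BEST, TMSORCL_BEST):.2f} times as fast")
```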

As you can see, TMSORCL beat out AULTDB on all queries with a range of 79% up to a whopping 4,474% improvement being shown based on the individual query times in seconds.

During the tests I monitored vmstat output at 5 second intervals; at no time did the run queue length get over 3, and IO wait was less than 5% on both servers. This indicates that the IO subsystem never became overburdened, which of course was more of a concern with AULTDB than with TMSORCL.
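
If you would rather not eyeball the vmstat output, a check like this can be scripted. The sketch below assumes the default Linux vmstat layout (a banner row, a column-name row starting with r, then all-integer samples); the function name check_vmstat, the thresholds as parameters, and the sample rows are all made up for illustration and are not output from my test servers.

```python
def check_vmstat(lines, max_run_queue=3, max_io_wait=5):
    """Return the samples whose run queue is over max_run_queue
    or whose IO wait (wa) is at or above max_io_wait percent."""
    header = None
    offenders = []
    for line in lines:
        fields = line.split()
        if not fields:
            continue
        if fields[0] == "r":
            # Column-name row, e.g. "r b swpd free ... id wa st".
            header = fields
            continue
        if header is None or not fields[0].isdigit():
            # Banner row ("procs ---memory--- ...") or anything before the header.
            continue
        sample = dict(zip(header, (int(f) for f in fields)))
        if sample["r"] > max_run_queue or sample.get("wa", 0) >= max_io_wait:
            offenders.append(sample)
    return offenders


# Hypothetical sample, shaped like "vmstat 5" output.
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- -system-- ------cpu-----
 r  b   swpd   free   buff  cache   si   so    bi    bo   in   cs us sy id wa st
 1  0      0 512000  80000 900000    0    0   120    40  300  500 20  5 73  2  0
 4  1      0 500000  80000 905000    0    0  9000   300  900 1500 30 10 50 10  0
"""

for bad in check_vmstat(SAMPLE.splitlines()):
    print(f"over threshold: run queue {bad['r']}, IO wait {bad['wa']}%")
```

To use it on a live system you would feed it the captured lines from vmstat 5 instead of the sample text.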

Now the TPCH benchmark is heavy on IOPS, so we would expect the database using the RAMSAN to perform better, and that is in fact what we are seeing, in spite of only having a single HBA and being on an older, less performing version of Oracle. So what conclusions can we draw from this test? Well, there are several:

For IOPS-heavy environments RAMSAN technology can improve performance by up to 700% against a JBOD array properly sized for the same application, depending on the number and type of queries.

Use of a RAMSAN can delay moving to larger, more expensive sets of hardware or software if the concern is IO performance related.

Now there are several technologies out there that offer query acceleration; most of them place a large data cache in front of the disks to “virtualize” the data into what are essentially memory disks. The problem with these various technologies (including TimesTen from Oracle) is that there are coding issues, placement issues (what gets cached and what is left out?) and management issues; for example, with TimesTen there are logging and backup issues to contend with. In addition, utilities that use the host’s memory, such as TimesTen, add CPU as well as memory burden to what is probably an overloaded system.

What issues did I deal with using RAMSAN? Well, using the provided management interface GUI (via a web browser) I configured two logical units (LUNS), assigned them to the HBA talking to my Linux host and then refreshed the SCSI interface to see the LUNS. I then created a single EXT3 partition on each LUN and pointed the database creation with DBCA at those LUNs. Essentially the same exact things you would do with a disk you had just assigned to the system. The RAMSAN LUNs are treated exactly as you would a normal disk LUN (well, you have to grant execute permission to the owner, but other than that…) Now, if you don’t place the entire database on the RAMSAN then you have to make the choice of what files to place there, usually a look at a Statspack or AWR report will head you in the correct direction.

An interesting artifact from the test occurred on both systems: after a couple of repetitive runs the times would degrade on several queries, and if I restarted the database the times would return to near the previous good values. This artifact probably points to temporary space or undo tablespace cleanup and management issues.

I next intend to run some TPCC and maybe, if I can get the needed infrastructure in place, a TPCE on each system. Watch here for the results.

Saturday, July 19, 2008

Atoms to Plowshares (Literally and figuratively)

After the conference I had some time to kill so I decided to visit Magnuson State Park. It wasn’t an arbitrary decision selected at random from the Seattle area map; I had heard of (and seen some pictures of) a sculpture there and decided to visit it if I could. My first set of directions actually took me to the artist’s house. I didn’t stop in and say hi, but just backtracked and went to the park.

Partial Shot of Sculpture

In the picture shown above, if you are not sure what you are looking at, let me explain. From the 1950s to the current date the USA has been building and using nuclear submarines, starting with the USS Nautilus, SSN 571, commissioned in 1954. Both search and destroy (fast attack) and stealth missile deployment (ballistic missile) submarines utilize stern planes and sail planes (the “sail” is what landlubbers would call the conning tower.) The sail planes are also called the dive planes as they are used to cause the submarine to dive and surface when it is neutrally buoyant.

Seattle Fins: SSN 669 Seahorse, SSBN 641 Simon Bolivar, SSN 652 Puffer, SSN 615 Gato, SSBN 620 John Adams, SSN 595 Plunger, SSN 638 Whale, SSN 667 Bergall, SSN 673 Flying Fish, SSN 597 Tullibee, SSN 650 Pargo, SSN 662 Gurnard.

Miami Fins: Sea Devil SSN 664, Pogy SSN 647, Sand Lance SSN 660, Pintado SSN 672, Trepang SSN 674, Billfish SSN 676, Archerfish SSN 678, Tunny SSN 682, Von Steuben SSBN 632, Sculpin SSN 590, Cavalla SSN 684.

I served on two nuclear submarines during the period 1976-1979, the USS John Adams, SSBN 620 and the USS Bergall, SSN 667, so you can see my interest in the Seattle sculpture. The SSBN on the Adams number means she was a ballistic missile boat; we carried up to 16 Poseidon missiles with MIRV warheads (multiple independently targetable reentry vehicles, meaning each missile of the Poseidon class could hit multiple targets) with a nuclear capability that exceeded the explosive power of all the munitions used in WWII. All this was used to carry out the MAD (mutually assured destruction) doctrine between the USA, USSR and at times Communist (Red) China, although the main targets were predominantly in the USSR. The SSN means the Bergall was a fast attack submarine used to hunt and kill other ships, including hostile submarines.

The MAD concept was that the SSBN type submarines, being undetectable, would be unstoppable launch platforms that would be used to respond to any nuclear aggression from anywhere in the world, thus assuring we could utterly destroy Russian civilization should they launch a first attack that succeeded in taking out our land based missile systems. The Russians spent a great deal of time, money and resources trying to find ways to beat the SSBN submarines; in no small part they were one of the key technologies that kept the Russians and Chinese from launching a first strike during the worst part of the cold war.

ComSubLAnt (Commander Submarine Atlantic) could communicate with us using radio and LFT (Low Frequency Transmissions.) They kept the encrypted traffic going 24x7, replacing any actual command traffic with 15 word family grams, news and other items so as not to allow the Russians to sense something was happening by seeing increased communications traffic. Each sailor was only allowed a limited number of family grams per patrol; no reverse communication, from the sailors back to the families, was allowed. A patrol lasted 3 months with most of that spent underwater on patrol and the balance in such sun-fun spots as Holy Loch, Scotland repairing what the other crew broke on their patrol. The SSBNs had two crews, the Golds and Blues; I was on the gold crew. You usually spent about 70-80 days underwater with no fresh air, no outside views and no females! Your biggest enemies were boredom and doing qualifications; you didn’t think about the hundreds of pounds per square inch of pressure that were striving to snuff out your life every second of every day while you were on patrol or you would go mad.

We were the warriors of the cold war. The cold war was officially over (at least most felt it was) when the Berlin wall was taken down in 1989; the submarine fleet was as much responsible for that as any president. We were away from our families 3 months out of every 6 for these patrols. I did 5 patrols and a DASO run for a total of 18 months out of the 33 I spent on the Adams. At just about any time during those 18 months a worn seal, a broken valve or a busted pipe could have killed us all, as it did for the sailors on the two nuclear submarines that didn’t come back, the USS Thresher, SSN 593 and the USS Scorpion, SSN 589. Believe me, listening to the pings and squeals as we went to test and one time to crush depth was a bit unnerving when you realized how much pressure it took to do that to several inches of stainless steel pressure hull. The Russians lost several submarines during that time as well and now most of their fleet lies in ruins, silently rusting away at the piers in Vladivostok and other Russian ports.

As I stood there and placed my hand against the only surviving part of the submarine that had guarded my (and your) life, both directly when I was aboard her and indirectly through the MAD concept when I wasn’t, I couldn’t help but feel a bit nostalgic and melancholy that such a fine ship met such an ignoble end as becoming feed stock for John Deere tractors, except for one sail plane in this sculpture garden. Of course the transition from a ship of war to farm implements maybe has greater cosmic import than I realize. The Bergall had both of her sail planes here, but since I only spent a few months and never went to sea on her, I didn’t feel the connection I did with the Adams.

As I wandered the sculpture garden taking pictures I heard and watched a group of children playing on a nearby hill. Later from that same hill I watched them walk down through the sculpture garden toward the beach and right past the last intact piece of the USS John Adams. I wondered if any of them truly understood what that piece of steel really meant. Of course maybe its true purpose was so that they never again would have to live under the threat of nuclear annihilation of the entire planet. I hope someone explains it to them, so that the meaning is not forgotten.

The Author beside the USS John Adams SSBN 620 Sail Plane

Wednesday, July 02, 2008

Lies, Damn Lies and SSD Technology

Let’s look at some of the highlights:

1. Solid state drive technology is very expensive

2. Solid state devices are best when directly attached to the internal bus architecture

3. Solid state drives will only be niche players

4. You can get the same IO rate from disks as from SSD

First, the myth that solid state drives are expensive was, like many myths involving Oracle and computers, true at one time; however, times change. The huge leap in demand for flash memory with the advent of iPods, digital cameras and video recorders has created a memory glut. You can get a 4 gigabyte flash memory stick or card for under a hundred dollars for your camera or other flash device. In fact memory prices promise to plunge even farther as mass production techniques and miniaturization technology improve. The cost for a gigabyte of enterprise class disk storage is around $84 at last count. For the most current version of the Texas Memory Systems RAMSAN SSD technology, using flash memory and regular memory, the cost is around $100 per gigabyte, and with further decreases in memory costs RAMSAN SSD prices will fall even further.

Second, in a recent article a producer of both disk and solid state technology seemed to indicate it works best when hooked directly into the internal bus for the computer and really wasn’t efficient when attached as a SAN would be attached. I am not sure where he is getting his information (other than that his company is trying to shoe-horn solid state drive technology into their existing SAN infrastructure) but it has been my experience that users rarely if ever flood the fibre channels. They may overload a couple of the disk drives, but generally the SAN connections are not the source of the bottleneck when it comes to SAN technology. Using standard fibre channel connections and standard host bus adapters Texas Memory Systems achieves over 400,000 IOPS from a single 4U RAMSAN SSD. To get the equivalent IOPS using regular disk technology you would need more than 6,000 individual disk drives, the racks to hold them and the controllers to control them, not to mention the air conditioning and electrical power needed for that many disks.

Next, the claim that solid state drives will only be niche players is a ridiculous statement. Most clients of RAMSAN SSD technology use them just as they would disk arrays. The RAMSAN SSD technology will replace disks as we know them in the near future and disks will be relegated to second tier storage and backup duties, replacing tapes. Many experts are talking of the tier 0 level of storage and specifically mentioning SSD when they do so. When you can place a single 4U sized RAMSAN SSD into your system and replace literally hundreds or thousands of disks the idea that they will only be niche devices is foolish. This is especially true when you consider the decreasing costs, the ease of administration and the performance gains that you get when SSD technology is properly deployed.

Finally, the myth that with disks you can get the same IOPS as with SSD. Yes, you can; however, you would need X/(IOPS per disk) disks, where X is the desired IOPS, and double that number for RAID10 or RAID01. Even high speed 15K drives can only deliver around 100 to 130 random-read IOPS due to the mechanical nature of disk drives. As the late Scotty on the Federation Starship Enterprise used to say (about every other episode): “Ya cannot change the laws of physics.” Disks, without prohibitive cooling technologies, cannot exceed certain maximum rotational speeds, read heads mounted on mechanical arms can only move so fast, and the magnetic traces can only be packed so close on the disk surface. To get 400,000 IOPS you would need at least (400,000/130)*2 = 6,154 drives in a RAID10 array. At 18 drives per tray that is 342 trays of disk drives, and at 8 trays per rack that is almost 43 racks needed to hold the drives. Now, even with the largest caches available you still require anywhere from a millisecond to several milliseconds (up to 5 with minimal loads, higher with large loads or more than single block reads) to do each IO. This latency will always be there in a disk based system; the latency on SSD based systems such as RAMSAN is in the hundreds of nanoseconds range (a tiny fraction of a millisecond).
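
For anyone who wants to rerun the drive count with their own numbers, here is a small sketch of the same arithmetic, rounding up to whole drives, trays and racks at each step. The function names are mine; the 130 IOPS per drive, 18 drives per tray and 8 trays per rack are the figures used above.

```python
import math


def disks_for_iops(target_iops, iops_per_disk, mirrored=True):
    """Drives needed to sustain target_iops of random reads.

    The count is doubled when mirrored is True (RAID10 or RAID01).
    """
    drives = math.ceil(target_iops / iops_per_disk)
    return drives * 2 if mirrored else drives


def trays_and_racks(drives, drives_per_tray=18, trays_per_rack=8):
    """Whole trays and whole racks needed to house the drives."""
    trays = math.ceil(drives / drives_per_tray)
    racks = math.ceil(trays / trays_per_rack)
    return trays, racks


drives = disks_for_iops(400_000, 130)
trays, racks = trays_and_racks(drives)
print(f"{drives} drives, {trays} trays, {racks} racks")
```

Plug in 100 IOPS per drive instead of 130 and the picture only gets worse for the disk array.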

So, what have we determined? We have found that SSD technology is comparable in cost with enterprise level disk systems and will soon beat the cost of enterprise level disk systems. We have also seen that SSD technology when properly designed and implemented (not shoe-horned into a disk-based SAN) will fulfill the promise of fibre technology and allow use of the bandwidth currently squandered by disk technology. We have also seen that far from being a niche technology, SSD is becoming the tier 0 storage for many companies and will soon supplant disks as the primary storage medium in many applications. Finally, while it is possible to achieve the same level of IOPS using disk technology that SSD technology provides, it would be cost prohibitive to do so, and, even if you did achieve the same level of IOPS, each IO would still be subject to the same disk based latencies.

I am not afraid to say it: SSD technology is here, it is ready for prime time and it is only a matter of time before disks are relegated to second tier storage. Disks are dead, they just don’t know it yet.

Friday, June 20, 2008

Is Hydrogen Really Green?

Since there is virtually no free hydrogen in nature, hydrogen has to be produced through electro-chemical or catalytic means. The most familiar means of hydrogen production is the electrolysis of common water: you take two water molecules (2 H2O), combine them with a jolt of electricity, and you get two hydrogen molecules (2 H2) and one oxygen molecule (O2). In a loss-less system you could then take the hydrogen, recombine it with the oxygen and get back the energy used to separate the molecules in the form of heat (the reaction is exothermic, as in gives off heat) or, in the case of a fuel cell, electricity, with a waste product of pure water. However, there is no such thing as a loss-less conversion going either way, so it takes more energy to produce hydrogen gas than you get from burning it or using it to produce electricity in a fuel cell.

Hydrogen is much less dense (in liquid form) than gasoline. This means that while hydrogen provides more BTU of energy per pound than gasoline, a pound of hydrogen takes up more volume. This density difference means that to take advantage of the increased BTU per pound you have to burn or convert a larger volume of hydrogen. How about a 60 gallon tank for your SUV? Hydrogen also must be kept compressed and/or insulated to prevent losses. Hydrogen tends to cause hydrogen embrittlement of most metals so long term storage is also an issue. Liquid natural gas lines could possibly be used to transport the liquefied hydrogen; however, better insulation and the embrittlement issues would have to be addressed before the existing infrastructure could be used safely.
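
To put rough numbers on the volume penalty, here is a back of the envelope sketch. The densities and heating values are approximate textbook figures that I am assuming for illustration, and the 16 gallon gasoline tank is a hypothetical SUV tank, so treat the result as ballpark only.

```python
# Approximate reference values, assumed for illustration (not measured data).
LH2_KG_PER_LITER = 0.0708       # liquid hydrogen near its boiling point
LH2_MJ_PER_KG = 120.0           # lower heating value of hydrogen
GASOLINE_KG_PER_LITER = 0.745   # typical gasoline
GASOLINE_MJ_PER_KG = 44.0       # lower heating value of gasoline


def volume_ratio():
    """Liters of liquid hydrogen holding the same energy as one liter of gasoline."""
    gasoline_mj_per_liter = GASOLINE_KG_PER_LITER * GASOLINE_MJ_PER_KG
    hydrogen_mj_per_liter = LH2_KG_PER_LITER * LH2_MJ_PER_KG
    return gasoline_mj_per_liter / hydrogen_mj_per_liter


def equivalent_tank_gallons(gasoline_tank_gallons):
    """Liquid hydrogen tank size with the same energy as a gasoline tank."""
    return gasoline_tank_gallons * volume_ratio()


print(f"energy per unit mass, hydrogen vs gasoline: {LH2_MJ_PER_KG / GASOLINE_MJ_PER_KG:.1f}x")
print(f"volume needed, hydrogen vs gasoline: {volume_ratio():.1f}x")
print(f"16 gallon gasoline tank -> {equivalent_tank_gallons(16):.0f} gallons of liquid hydrogen")
```

With these assumed values the hydrogen tank comes out at several times the gasoline volume, which is where a figure like 60 gallons comes from.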

One promising hydrogen storage technology uses metal hydrides such as zirconium hydride that allow storage of hydrogen in interstitial sites in the crystal lattice, some tests show that storage densities exceeding liquid densities by several fold are possible. The use of metal hydride storage would put the fuel tank back at the current size in your SUV and would provide added safety since the hydrogen is not in liquid or gas form.

It should be obvious that using fossil fuels such as natural gas, oil, or coal to generate electricity to create hydrogen would be a huge mistake. The carbon dioxide (CO2), the major greenhouse gas, created by using any fossil fuels to produce hydrogen would cause far more damage than any benefit gained from using the hydrogen thus produced. However, it remains to be seen if some enterprising third-world country (no doubt financed by mainline energy companies) doesn’t use massive coal burning to produce hydrogen for sale to the more industrialized countries.

This leaves us with wind, solar, tidal or nuclear power to provide the needed energy to produce hydrogen in sufficient quantities to make it a viable energy source. What are the economic considerations of each of these energy sources?

Use of wind power

On the surface wind power looks good. You put up a tower (or two, or a hundred) with a wind generator, get electricity and dump the electricity into an electrolytic cell that produces hydrogen. Of course some of the electricity needs to go into compressors to store the gaseous hydrogen, and some needs to go into pumping and purifying the water being fed into the electrolytic cell. At current manufacturing costs electricity from wind runs 4-6 cents per kilowatt hour, when the wind blows and it isn’t raining too hard, freezing or being repaired because of lightning strikes. Wind also ties up huge amounts of real estate, causes noise pollution and has reliability issues. Plus, producing the nearly 250 gigawatts of power needed to make enough hydrogen gas to replace fossil fuels in transportation alone, for just the USA, would require 125,000 2 megawatt wind turbines, or a mere 50,000 of the new 5 megawatt mega-turbines, even with every turbine running at full rated output. Want one over the top of your house?

Use of Solar Power

Let’s look at solar power: it is quiet and produces no waste, so it is perfect, right? Not quite. Even if the new high efficiency cells pan out and we double or quadruple the efficiency of existing cells by using new technologies (to 40-60 percent conversion efficiency) we will still only have a cost of 8-10 cents per kWh, more expensive than wind generation technology. A solar constant of 1,395 watts per acre and a 60% conversion efficiency yield a back of the envelope figure of 837 watts per acre. To provide the 250 gigawatts using solar we would need to clear 30 million acres of land and put in high efficiency solar panels. How does that grab you?

Use of Nuclear Power

Nuclear power can produce immense amounts of energy while requiring only small amounts of space. The 250 gigawatts required for the hydrogen gas production would require 150-160 new reactors to be built. At 100 acres per plant this is only 16,000 acres of land; as a comparison, Ted Turner’s ranch properties are estimated at over 1.9 million acres. As to the nuclear waste issues, the new designs for reactors promise to reduce waste and make better use of recycling of fuel. This won’t completely eliminate the nuclear waste problem but may make it more manageable. At 11.1 to 14.5 cents per kWh it is currently one of the more expensive options until you consider the environmental costs of other technologies. However, by using the heat from the nuclear process to facilitate steam methane reforming, biomass gasification or coal gasification to produce hydrogen more efficiently, we could reduce the cost, increase the output and reduce the number of needed nuclear plants, thus further mitigating the nuclear waste problem.
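
The reactor and land figures are easy to sanity check. This sketch only uses the numbers in the paragraph above (250 gigawatts, 150-160 reactors, 100 acres per plant, 1.9 million acres of ranch land); the function name and the idea of reporting the implied output per reactor are my own additions.

```python
TARGET_GW = 250             # power needed for hydrogen production
ACRES_PER_PLANT = 100       # land per nuclear plant
RANCH_ACRES = 1_900_000     # comparison figure for a very large private landholding


def nuclear_footprint(reactors):
    """Return (implied GW per reactor, total acres, share of the comparison acreage)."""
    gw_per_reactor = TARGET_GW / reactors
    total_acres = reactors * ACRES_PER_PLANT
    return gw_per_reactor, total_acres, total_acres / RANCH_ACRES


for reactors in (150, 160):
    gw_each, acres, share = nuclear_footprint(reactors)
    print(f"{reactors} reactors: {gw_each:.2f} GW each, {acres:,} acres, "
          f"{share:.2%} of the comparison acreage")
```

Either way the land needed is well under one percent of the comparison figure.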

Summary

So, before we all leap upon the hydrogen technology bandwagon we need to step back and examine the real costs and the real technologies needed to make it a reality. Is hydrogen really a green technology? As with all things technology the answer is a fully qualified maybe.

Friday, May 30, 2008

Opportunities Part Two

For those not sure what Texas Memory Systems does, check out their website at http://www.texmemsys.com/. One of my duties will be to manage/monitor the StatsPack Analyzer website at http://www.statspackanalyzer.com/, if you have a statspack (or AWR) report you want analyzed, log on and upload it! Also, swing by the forums and have a go at improving our rules for statspack/AWR evaluation. We want to improve the Statspack Analyzer application and we value your feedback.

So, I was actually unemployed for 15 days (11 workdays) but I did have the offer within 36 hours, a new record for me. I can’t imagine how I would feel being out of work for several weeks or months as 15 days was disturbing enough (after all, how much Oprah or Dr. Phil can one person take?) To all of those job hunting right now, stick with it, good luck and I hope you have as good a luck as I did in my search.

I am really looking forward to getting in the new equipment down in the dungeon where I can torture it to my heart’s content with various tests, benchmarks and other procedures my devious mind can come up with. I am also looking forward to publishing the results so everyone can see how amazing this SSD technology really is. Imagine a 146 times improvement in query performance. How about virtually no latency for redo log operations? Take a look at some of the client histories and white papers on http://www.texmemsys.com/ to see real data.

Anyway, it is great to be part of the working masses once again, unemployment is hard work!

Sunday, May 18, 2008

Opportunity

During my 10 years as a Nuclear Chemist I watched the Nuclear industry go from a growth industry, to a stable one, to a declining one. Now of course with oil prices rising and CO/CO2 emissions on everyone’s mind the Nuclear industry is making a comeback. No, I am not returning to the Nuclear industry as my skill set is nearly 20 years out of date. However, for the first time in 18 years I have been noticing certain indicators in the Oracle DBA marketplace that show it may be time for a change.

The indicators of course deal with the increased automation of the Oracle DBA job by Oracle coupled with the large supply of (at least on the low end) DBA services as outsource resources. It is difficult to compete with DBA resources that are happy to receive a small fraction of the salary you need to live on in the USA. I predict that the DBA job for Oracle will cease to exist as we know it within 3-5 years. Perhaps rather than being part of the large out flux of skilled but overpriced Oracle talent at this future date it is time to evaluate where you want to be in 3-5 years.

You may know (or you may not as they are keeping things rather under wraps) Quest just went through a large round of layoffs in their Oracle and database areas. Yes, I was caught in the layoff and was officially put in the ranks of the unemployed on May 15, 2008. Perhaps it is a signal that it is time to move to another area of expertise. Don’t worry, I am landing on my feet and already have an excellent opportunity I am considering and unless something else shows up that is absolutely stellar, I will probably take it. The opportunity provides a path to stop feeding from the Oracle trough and move into an area that is more future-proof. I’ll keep you posted as I move forward with this new prospect.

Of course this is a record for me, in 35 years of working it is the longest I have gone without a job (so far 2 full work days) however, I did have the offer within 36 hours of being laid off. So, I plan to use this as an opportunity to step back and really consider where the computer industry is going and look to a position that will enable me to make full use of the skills I have acquired while moving forward into new and exciting areas. Opportunity is knocking, I think I’ll answer.

Wednesday, April 02, 2008

If you aren't part of the Solution, you are part of the Precipitate...

In my last blog I basically stated that the western nations could do little to reduce greenhouse gas emissions and that even if we (we as the old established, stable nations) completely eliminated greenhouse emissions it would make virtually no difference in the long run.

So, what can we do? Well let me list a few items first.

Point 1: Melting of polar/glacial ice is reducing the albedo of the Earth (decreasing our reflectivity) because when the white snow/ice melts it is being replaced by dirt/water which absorb infrared and other energies rather than reflecting them, producing heat.

Point 2: Replacing trees with manmade, usually dark, structures is resulting in urban areas absorbing more heat.

Point 3: In most homes the highest use of energy comes from air conditioning, heating, and heating of water.

Point 4: The major cause of global warming is the effect of the nearly circular orbit in this phase of the Earth’s 100,000 year cyclic dance with the sun, resulting in the highest level of solar input to the eco-system in 100,000 years.

Point 5: Burning of fossil fuels for power generation and transportation adds millions of tons of greenhouse gases to the environment.

Point 6: Developing third world countries and China and India have little incentive to reduce emissions.

Ok, what can be done about point 1? Well, we could paint the ground reflective white where the ice/snow has melted and construct large floating reflective surfaces in place of the missing ice. Of course this would have to be done over and over again as the calving ice flows smashed down. In addition, the angle at which the Sun’s rays strike at the poles makes this very inefficient. So, what else could we do?

Hmmm, how about a tax credit for everyone in the USA that is willing to have their dark roofs painted with reflective paint? Since the sunlight reaching the USA (for the most part) arrives at a more direct angle than the polar sunlight we would get more efficient reflection. This would also reduce the internal temperature of the houses, reducing the cooling loads in the summer months. Perhaps something could be done with high tech coatings that would absorb heat when the temperature dropped below a certain level? Hmmm…this also covers some of point 2.

Alright, what about point 2? Prohibit builders from taking 100 percent of a building site to grade. At least in Atlanta the first thing builders do to a new subdivision is knock down all of the trees, bushes and grass and scrape away the top inches of topsoil so they can “clear to grade”. Make builders have to justify every tree they remove. Give huge tax credits to “green” builders who build off the grid homes, build underground homes and homes embedded in hillsides. Make it worth their while to not knock all the vegetation to flinders when they build new homes. Penalize them heavily if they do clear to grade. Use of passive solar for water heating, solar panels, and other energy saving techniques should also give tax benefits to those who pursue them.

On point 3, use of the solutions for point 1 and 2 should handle most if not all of point 3. Use of proper passive solar designs can help heat the home, heat hot water and provide for cooling through proper use of convection flow.

On point 4, ok, this one is tough. However, let me delve into my engineering and radiation technologist background. The actual percentage of the Sun’s energy that reaches us is quite small. By obscuring even a small (relatively speaking) percentage of this radiation nearer the source it could make a large difference in temperature rises on Earth. As you get nearer the Sun the amount of area that actually needs to be blocked becomes quite small (a small percentage of the Earth’s diameter actually.)

Think of a flashlight beam: the width of the beam at the flashlight head is much smaller than if you project the light onto a building 100 feet away. Now, if we assume the diameter of the Sun is equal to the diameter of that flashlight lens, the Earth’s diameter is 1/110 of that diameter. So, for ease of calculation let’s make the diameter of the lens 11 inches (a really big light!); in this case, the Earth would be roughly 1/10 of an inch in diameter. Now, place the 1/10 of an inch diameter Earth about 98 feet away (the real Earth sits roughly 107 Sun diameters out). How much of the flashlight beam is intersecting our 1/10 of an inch Earth? Not a whole heck of a lot. This is equivalent to the actual amount of Solar energy we get out of the ½ of the Sun’s total output (remember, half of the Sun’s output gets sent the other way.)
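
To put a number on “not a whole heck of a lot”, here is a rough sketch of the geometry: the Earth’s disk divided by the full sphere the Sun radiates into at the Earth’s distance. The Earth diameter and Earth-Sun distance below are approximate values I am assuming for illustration, and the result is the fraction of the Sun’s total output, not of half of it.

```python
EARTH_DIAMETER_KM = 12_742          # approximate mean diameter, assumed
EARTH_SUN_DISTANCE_KM = 149_600_000  # approximately one astronomical unit, assumed


def intercepted_fraction(earth_diameter_km, distance_km):
    """Fraction of the Sun's total output that crosses the Earth's disk.

    Disk area (pi * r^2) over the area of a sphere at the Earth's
    distance (4 * pi * d^2), which simplifies to (r / d)^2 / 4.
    """
    radius = earth_diameter_km / 2
    return (radius / distance_km) ** 2 / 4


fraction = intercepted_fraction(EARTH_DIAMETER_KM, EARTH_SUN_DISTANCE_KM)
print(f"fraction of total solar output reaching Earth: {fraction:.2e}")
print(f"that is about 1 part in {1 / fraction:,.0f}")
```

In other words, only a few parts in ten billion of what the Sun puts out ever lands on us.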

Everyone has seen or at least heard of total solar eclipses. A solar eclipse happens when the Moon (roughly 1/4 the size of Earth) passes between us and the Sun. The Moon completely covers the Sun’s disk when this happens. So, assuming that we wanted to block 2 percent of the Solar input we would need a disk roughly the same distance from the Earth as the Moon is that is 1/50th the size of the Moon (21 miles in diameter). If we move it out to twice as far as the Moon’s orbit this drops to 10.5 miles in diameter, at 4 times as far, to 5.25 miles in diameter and so forth and so on.

It would be a relatively stupid satellite, only needing to keep itself relatively stationary directly between the Earth and the Sun in a solar orbit, with minimal thrusters to combat light pressure and do station keeping. Manufacture it out of used aluminum cans. At only an inch thick, that would require a little more than 685 tons of aluminum (or, make it inflatable out of mylar only a couple of millimeters thick…) You would be surprised how fast temperatures would go down. Build it with shutters so we could fine tune how much we let through, make it solar powered or use the entire thing as a parabolic dish to run a thermionic generator to provide the power to run it… When we didn’t need it anymore (in a couple of hundred years) nuke it into oblivion to restore the solar output, shoot, build in a self-destruct after 100 or so years, or just turn it edgewise or push it off solar axis in orbit using its thrusters.

On point 5, give big tax breaks for companies that show they really are going green. Utilize nuclear energy. Give breaks to companies that produce “green” hydrogen and breaks to consumers who utilize hydrogen burning cars.

For point 6, pay subsistence farmers to not cut down the rain forest. Provide food from all that we waste to feed them and their families. Only give foreign aid to countries that can prove they are doing everything possible to develop “green” technology. Put stiff import trade embargoes on anything that is not produced using green technologies world wide.

Just a few suggestions, and believe it or not, all of them doable and all of them would provide positive and lasting results. Let’s stop gnashing our teeth and solve the issues that we can.

Friday, March 14, 2008

Global Warming

But all of the gloom and doom got me thinking. I’ve seen the same reports most of you have, and probably a couple you haven’t, I am sure you can say you’ve seen reports I haven’t. Anyway, mashing it all together my mind came up with a few salient points.

Population of China: 1,321,851,888

Population of India: 1,129,866,154

Population of USA: 301,139,947

Population of Europe: 788,000,000

Population of South America: 371,000,000

Population of Russia: 141,000,000

Others: 2.5 billion

China and India are charging into an economic growth unprecedented in history. This growth will mean industry, power production, cars, trucks, etc. all on a scale we have never seen.

Even if the USA and Europe drop their carbon and other emissions to zero immediately, I seriously doubt China, India and South America will go back to the primitive conditions they have striven to climb out of over the last decade or two. So, unless we are willing to force stagnation and no growth on China, India, South America and everyone else who falls into the “third world” category, there is precious little we can do about humans’ global warming contributions.

Of course there are computer models that show that if all human greenhouse gases stopped dead tomorrow, it would make maybe a half a degree or less difference over the next 50 years. The major problem is that the Earth’s orbit is fairly circular right now, meaning that the solar energy being input into the Earth’s ecosystem is at a maximum. This happens on a grand cycle of about 100,000 years as the orbit goes from elliptical to circular and back again and as the Earth “nutates” on its axis.

One of the well-meaning scientists showed a chart of CO2 levels and temperature over the last several thousand years which clearly showed the rise in CO2 levels corresponds to a rise in temperature, as has happened over and over again as far back as can be determined! This means this cycle has been happening since before the industrial revolution, heck, before the agricultural revolution, before the stone age. In short, it is a natural cycle. To my slightly trained eyes the curves looked nearly identical right up to the most current one. Of course he immediately zoomed in on the most current and blamed it all on man.

The graphic above shows the CO2 curve I am talking about; it shows the CO2 concentrations as charted at Vostok, Antarctica from ice cores. Notice a sudden jump in CO2 about every 100 thousand years (some say corresponding to a long solar cycle where we reach the closest we ever get to the sun). You can also see we are right on schedule for a sudden jump and are right on path. Of course what usually happens after this cycle is an ice age (are you ready for global cooling?). The graph runs from the current time on the far left to 400,000 years ago on the far right. At the low point in the current graph, the city recently found submerged off of India, the one off of Cuba and the carvings off of Japan were thriving on what was the sea shore. No telling what other archeological marvels are sitting on the old sea coast at about 120-160 feet deep along the continental shelves.

In fact the largest jumps in CO2 happened well before man was doing anything other than scraping the ground with sticks in most places to plant maize. Now I suppose I could really go off the deep end and propose that these 100 thousand year cycles correspond to previous peaks in heretofore unknown civilizations that, because of glaciations and natural decay, we have no clue about, but I am afraid the fossil record doesn’t support that.

To think we humans are the sole agents of change in all of this is rather egotistical, don’t you think? Especially ironic is that Mars and the moons of Jupiter and Saturn are all experiencing their own global warming; I suppose we are responsible for their problems as well.

Friday, February 01, 2008

Are Humans Intelligent Enough for Religion?

Islam used to be the most enlightened religion of its day, encouraging learning, thinking and acceptance; now I suspect its Prophet wouldn’t recognize it. The atrocities done in the name of Christianity over the centuries are many, as are those being done right now both by and to Christians. All of this raises my original question in the title of this blog: Are humans intelligent enough for religion?

It seems humans in general can’t help twisting even the most enlightened teachings to meet their own twisted logic. Of course more at fault are the pathetic followers who swallow teachings of monsters as whole and good even when in their heart of hearts (one hopes) they know it is drivel.

The latest example is the story of Sayed Pervez Kambaksh, a student in Afghanistan who shared an article he had read about women’s rights with some friends, was turned in, and now faces a death sentence. Yes, that is right, a death sentence, for daring to read and show his friends an article on women’s rights. If you find this as wrong as I do, sign the digital petition at www.independent.co.uk/petition. Maybe they will listen if enough of the world stands up to them.

Prove that we are smart enough for religion: take it back from those who twist it to their own ends.

Wednesday, January 02, 2008

Everything I Really Needed I Learned from Twilight Zone

Many places and people were never exposed to Twilight Zone. For those not aware of Twilight Zone, it was a 60's era TV series hosted (and sometimes written) by a man named Rod Serling. Rod Serling grew up in Binghamton, New York, not that that has a lot to do with it, but it is an interesting bit of trivia (and every small "idyllic" town he used in his stories was based on it). Anyway, the stories usually took one idea and took that idea to its maximum execution (for example: What if there was a war and everyone died but a single man and woman...on opposite sides, of course). Each show was designed to make you think, as well as entertain.

Later in the 60's came The Outer Limits, another show that wanted to make you think. Between them they stirred the neurons in my young, forming mind around a bit, making quite a few interesting connections and forming my immature sense of right and wrong and sense of humor into what they are today. Many of the lessons in the shows are very important.

1. If you don't know what it does, don't mess with it

2. Treat others as you wish to be treated

3. Tolerate new ideas

4. Tell the truth as often as possible

5. Just because something is different doesn't make it bad

6. God might be the next person you talk to

7. Respect everyone; man, woman, child, or alien from Beta Lauri

Maybe if everyone was exposed to as many new ideas and thoughts as these shows pumped into my young mind we wouldn't have as many issues as we do now. If we all could practice moderation in word, thought and deed, wouldn't it make the world a bit easier to deal with?

Anyway, just some musing on this, the first day of 2008. By the way, I have been upgrading my picture galleries on http://www.scubamage.com/, stop by and have a peek.

Happy New Year to everyone, may this be the year you have been waiting for!