I see quite a few questions about creating a crosstab table. While working with a good friend, Gary Withrow, who works for Santa Cruz County, California, he showed me a great technique for doing crosstabs that he has given me permission to share. Essentially it involves using the capability of embedding expressions into the COUNT or other functions available in Oracle.

In 9i and above, of particular use in generating crosstabs is the CASE structure, for example:

-- Crosstab of owner and object_type

--

col owner format a10

set numwidth 8

set lines 132

set pages 50

select DECODE(GROUPING(a.owner), 1, 'All Owners',

a.owner) AS "Owner",

count(case when a.object_type = 'TABLE' then 1 else null end) "Tables",

count(case when a.object_type = 'INDEX' then 1 else null end) "Indexes",

count(case when a.object_type = 'PACKAGE' then 1 else null end) "Packages",

count(case when a.object_type = 'SEQUENCE' then 1 else null end) "Sequences",

count(case when a.object_type = 'TRIGGER' then 1 else null end) "Triggers",

count(case when a.object_type not in

('PACKAGE','TABLE','INDEX','SEQUENCE','TRIGGER') then 1 else null end) "Other",

count(case when 1 = 1 then 1 else null end) "Total"

from dba_objects a

group by rollup(a.owner)

/

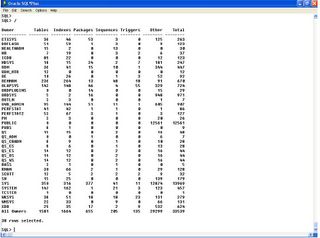

The above SQL will generate a crosstab report on Object owner verses counts of the various type of objects they own as well as totals across all columns:

SQL Result

In 9i and above, of particular use in generating crosstabs is the CASE structure, for example:

-- Crosstab of owner and object_type

--

col owner format a10

set numwidth 8

set lines 132

set pages 50

select DECODE(GROUPING(a.owner), 1, 'All Owners',

a.owner) AS "Owner",

count(case when a.object_type = 'TABLE' then 1 else null end) "Tables",

count(case when a.object_type = 'INDEX' then 1 else null end) "Indexes",

count(case when a.object_type = 'PACKAGE' then 1 else null end) "Packages",

count(case when a.object_type = 'SEQUENCE' then 1 else null end) "Sequences",

count(case when a.object_type = 'TRIGGER' then 1 else null end) "Triggers",

count(case when a.object_type not in

('PACKAGE','TABLE','INDEX','SEQUENCE','TRIGGER') then 1 else null end) "Other",

count(case when 1 = 1 then 1 else null end) "Total"

from dba_objects a

group by rollup(a.owner)

/

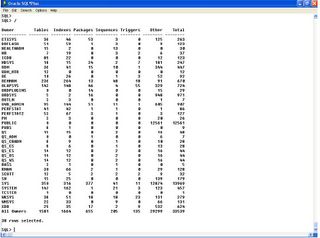

The above SQL will generate a crosstab report on Object owner verses counts of the various type of objects they own as well as totals across all columns:

SQL Result

As you can see, this is a very useful technique.

In older versions of Oracle where the CASE is not available use DECODE instead:

select DECODE(GROUPING(a.owner), 1, 'All Owners',

a.owner) AS "Owner",

count(decode( a.object_type,'TABLE',1,null)) "Tables",

count(decode( a.object_type,'INDEX' ,1,null)) "Indexes",

count(decode( a.object_type,'PACKAGE',1,null)) "Packages",

count(decode( a.object_type,'SEQUENCE',1,null)) "Sequences",

count(decode( a.object_type,'TRIGGER',1,null)) "Triggers",

count(decode( a.object_type,'PACKAGE',null,'TABLE',null,'INDEX',null,'SEQUENCE',null,'TRIGGER',null, 1)) "Other",

count(1) "Total"

from dba_objects a

group by rollup(a.owner)

/

In older versions of Oracle where the CASE is not available use DECODE instead:

select DECODE(GROUPING(a.owner), 1, 'All Owners',

a.owner) AS "Owner",

count(decode( a.object_type,'TABLE',1,null)) "Tables",

count(decode( a.object_type,'INDEX' ,1,null)) "Indexes",

count(decode( a.object_type,'PACKAGE',1,null)) "Packages",

count(decode( a.object_type,'SEQUENCE',1,null)) "Sequences",

count(decode( a.object_type,'TRIGGER',1,null)) "Triggers",

count(decode( a.object_type,'PACKAGE',null,'TABLE',null,'INDEX',null,'SEQUENCE',null,'TRIGGER',null, 1)) "Other",

count(1) "Total"

from dba_objects a

group by rollup(a.owner)

/

I have tested this in 8i, 9i and 10g and it works in all versions.

Thanks Gary for finding it and sharing it!

Thanks Gary for finding it and sharing it!