In my own laboratory (no, I don’t have an Igor, but he would come in handy to help dispose of dead computers now and then) I am running a two-node RAC cluster, with each cluster node being a single 3 gigahertz 32-bit CPU with hyperthreading and 3 gigabytes of memory. The cluster interconnect is a single gigE Ethernet. As to storage, I utilize two JBOD arrays, a NexStor 18F and a NexStor 8F, each fully loaded with 72 gigabyte 10K Seagate SCSI drives and connected to the servers via a Brocade 2250 with dual QLA2200 1 Gb HBAs on each server. The arrays themselves are connected through single 1 Gb fibre channel connections. Oh, it is also running RedHat 4.0 Linux.

On the system in the remote lab, let’s call it TMSORCL for ease of identification, I have four 3.6 GHz CPUs, four 2-port QLA2642 4 Gb HBAs (I used 1) and 8 gigabytes of memory. TMSORCL has Oracle10g 10.1.0.2 installed; the home lab system (let’s call it AULTDB) has Oracle11g 11.1.0.2 installed.

AULTDB is utilizing ASM on two diskgroups. One diskgroup consists of 16 drives, 8 on the 18F and 8 on the 8F, using normal redundancy and failgroups; the other diskgroup uses 8 drives on the 8F with external redundancy. I have placed the application data files and index files on the diskgroup with the 16 drives and the rest (system, temp, user, undotbs, redo logs) on the 8-disk externally redundant diskgroup. Using ORION (Oracle’s disk IO simulator) I was able to achieve nearly 6000 IOPS using 24 drives, so I hope that I can get near that using this configuration.

TMSORCL is using only the RAMSAN 400, through its 4 Gb fibre channel connection, for all database files. The RAMSAN should be able to deliver at least 100,000 IOPS through the single HBA (if it doesn’t saturate, that is).
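Whether a single 4 Gb link saturates at that rate depends on the I/O size. The following is a rough back-of-the-envelope sketch, not a measurement: it assumes the nominal 400 MB/s per direction usually quoted for 4 Gb Fibre Channel, ignores protocol overhead, and the I/O sizes in the loop are hypothetical values chosen for illustration.

```python
# Assumption: nominal 4 Gb Fibre Channel throughput, per direction, in MB/s.
FC_4GB_MB_PER_S = 400
# Target rate taken from the text above.
TARGET_IOPS = 100_000


def required_mb_per_s(iops: int, io_size_kb: int) -> float:
    """Bandwidth needed to sustain `iops` requests of `io_size_kb` each."""
    return iops * io_size_kb / 1024


def max_iops(link_mb_per_s: float, io_size_kb: int) -> float:
    """Upper bound on IOPS a link of `link_mb_per_s` can carry at this I/O size."""
    return link_mb_per_s * 1024 / io_size_kb


# Hypothetical I/O sizes; the actual sizes issued in the test are not stated.
for io_size_kb in (2, 4, 8, 16):
    need = required_mb_per_s(TARGET_IOPS, io_size_kb)
    ceiling = max_iops(FC_4GB_MB_PER_S, io_size_kb)
    verdict = "fits" if need <= FC_4GB_MB_PER_S else "saturates the link"
    print(f"{io_size_kb:>2} KB I/O: needs {need:7.1f} MB/s, "
          f"link ceiling {ceiling:9,.0f} IOPS -> {verdict}")
```

Under these assumptions, 100,000 IOPS fits within the link at 4 KB per request but would need roughly 781 MB/s at 8 KB, so the link rather than the RAMSAN becomes the limit for larger reads.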

Using Benchmark Factory from Quest Software I created two identical databases (you guessed it, TMSORCL and AULTDB.) These are TPCH type environments with a scale factor of 18, the largest I could support and still have enough temporary space to build indexes and run queries.

Now, some may be saying that I have set up an apples to oranges environment for comparison, and they would be correct. However, many folks will be facing just such a choice very soon: stick with an Oracle10g environment or upgrade to an 11g environment? Another question that many folks have is, should I go to RAC? So this test is not as useless as you may have first thought.

I set up both TMSORCL and AULTDB to be as near as possible in effective size (memory settings wise). AULTDB was spread across two 3 gigabyte memory areas, allowing for a total memory footprint of about 3-4 GB, so I set up the TMSORCL environment to have a maximum size of 4 GB with a target of 3 GB for memory.

I had some interesting problems with the number 5 query in the TPCH query set until I added a few additional indexes; it kept blowing out the temporary tablespace even though I had it as large as 50 gigabytes. If you want the script for the additional indexes, email me. I also had issues with parallel query slaves not releasing their temporary areas. Anyway, after adding the indexes and incorporating periodic database restarts into the test regime, I was able to complete multiple full TPCH power runs on each machine (just the single user stream 0 power run for now).

So, before we get to the results, let’s recap:

AULTDB – 32-bit RedHat 4 Linux RAC environment with two single 3 GHz CPUs running hyperthreaded to simulate 4 CPUs total, 6 gigabytes of memory, and four 1 Gb QLA2200 HBAs accessing 24 10K 72 gigabyte drives using ASM. Effective SGA 4 gigabytes.

TMSORCL – 64-bit RedHat 4.0 Linux single server with four 3.6 GHz CPUs and 8 gigabytes of memory, utilizing one 4 Gb HBA port to access a single 128 GB RAMSAN 400. Effective SGA constrained to 4 gigabytes.

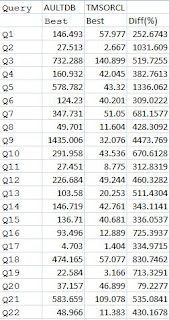

I ran the TPCH on each database (after getting stable runs) a total of 4 times. I will use the best run for each database, as measured by total time to complete the 22 queries, for the comparison. For AULTDB the best time to complete the run was 1:37:55 (one hour, thirty-seven minutes and fifty-five seconds). For TMSORCL the best time was 0:15:15 (zero hours, fifteen minutes and fifteen seconds). So on raw total elapsed time, for identical data volumes, identical data contents and identical queries, the TMSORCL database completed the runs 6.42 times faster (642%). The actual query timings in seconds are shown in the following chart. Based on summing the given query times, the performance improvement factor from AULTDB to TMSORCL is 6.68, or 668% faster.
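The elapsed-time ratio can be checked with a few lines of Python. This is only a sketch of the arithmetic; the helper name `to_seconds` is my own, and the only inputs are the two best run times quoted above.

```python
def to_seconds(hms: str) -> int:
    """Convert an H:MM:SS string to whole seconds."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    return hours * 3600 + minutes * 60 + seconds


aultdb_best = to_seconds("1:37:55")   # best AULTDB run, from the text
tmsorcl_best = to_seconds("0:15:15")  # best TMSORCL run, from the text

# Ratio of elapsed times: how many TMSORCL runs fit into one AULTDB run.
speedup = aultdb_best / tmsorcl_best

print(f"AULTDB:  {aultdb_best} s")
print(f"TMSORCL: {tmsorcl_best} s")
print(f"Elapsed-time ratio: {speedup:.2f}")
```

The same division applied to each pair of per-query timings gives the individual improvement factors.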

As you can see, TMSORCL beat out AULTDB on all queries with a range of 79% up to a whopping 4,474% improvement being shown based on the individual query times in seconds.

During the tests I monitored vmstat output at 5 second intervals. At no time did the run queue length get over 3, and IO wait was less than 5% on both servers. This indicates that the IO subsystem never became overburdened, which of course was more of a concern with AULTDB than with TMSORCL.
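A check like this is easy to automate when the vmstat output has been captured to a file (for example with `vmstat 5 > vmstat.log`). The sketch below is one way to do it, not the method used in these tests. The function name, the thresholds as parameters, and the sample rows are all hypothetical; the sample numbers are invented to exercise the parser and are not readings from either server.

```python
def check_vmstat(text: str, max_run_queue: int = 3, max_io_wait: int = 5):
    """Return the vmstat sample rows that break either threshold.

    A row is flagged when the run queue (r) is above max_run_queue or
    IO wait (wa) is at or above max_io_wait percent. Column positions are
    taken from the vmstat header row rather than hard-coded.
    """
    header = None
    violations = []
    for line in text.splitlines():
        fields = line.split()
        if not fields:
            continue
        if "r" in fields and "wa" in fields:
            header = fields  # column-name row; vmstat repeats it periodically
            continue
        if header is None or not fields[0].isdigit():
            continue  # skip the "procs ---memory---" banner line
        if len(fields) != len(header):
            continue  # ignore malformed or truncated rows
        row = dict(zip(header, map(int, fields)))
        if row["r"] > max_run_queue or row["wa"] >= max_io_wait:
            violations.append(row)
    return violations


# Invented sample in the classic vmstat layout (not data from the test).
SAMPLE = """\
procs -----------memory---------- ---swap-- -----io---- --system-- ----cpu----
 r  b   swpd   free   buff  cache   si   so    bi    bo   in    cs us sy id wa
 1  0      0 512000  80000 900000    0    0   120    40 1100   900 20  5 73  2
 4  1      0 500000  80000 910000    0    0  9000   300 1500  1200 30 10 40 20
"""

violations = check_vmstat(SAMPLE)
for row in violations:
    print(f"threshold exceeded: run queue={row['r']}, io wait={row['wa']}%")
```

An empty result over a whole run corresponds to the situation described above: no sample with a run queue over 3 or IO wait of 5% or more.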

Now the TPCH benchmark is heavy on IOPS, so we would expect the database using the RAMSAN to perform better, and that is in fact what we are seeing, in spite of it having only a single HBA and being on an older, lower-performing version of Oracle. So what conclusions can we draw from this test? Well, there are several:

For IOP heavy environments RAMSAN technology can improve performance by up to 700% against a JBOD array properly sized for the same application, depending on number and type of queries.

Use of a RAMSAN can delay moving to larger, more expensive sets of hardware or software if the concern is IO performance related.

Now there are several technologies out there that offer query acceleration; most of them place a large data cache in front of the disks to “virtualize” the data into what are essentially memory disks. The problem with these various technologies (including TimesTen from Oracle) is that there are coding issues, placement issues (what gets cached and what is left out?) and management issues. For example, with TimesTen there are logging and backup issues to contend with. In addition, utilities that use the host’s memory, such as TimesTen, add CPU as well as memory burden to what is probably an already overloaded system.

What issues did I deal with using RAMSAN? Well, using the provided management interface GUI (via a web browser) I configured two logical units (LUNs), assigned them to the HBA talking to my Linux host and then refreshed the SCSI interface to see the LUNs. I then created a single EXT3 partition on each LUN and pointed the database creation with DBCA at those LUNs. These are essentially the same things you would do with a disk you had just assigned to the system. The RAMSAN LUNs are treated exactly as you would a normal disk LUN (well, you have to grant execute permission to the owner, but other than that…). Now, if you don’t place the entire database on the RAMSAN then you have to choose which files to place there; usually a look at a Statspack or AWR report will head you in the correct direction.

An interesting artifact from the test occurred on both systems: after a couple of repetitive runs the times would degrade on several queries, but if I restarted the database the times would return to near the previous good values. This artifact probably points to temporary space or undo tablespace cleanup and management issues.

I next intend to run some TPCC and maybe, if I can get the needed infrastructure in place, a TPCE on each system. Watch here for the results.